‘Constrained agency’ is becoming the rule in the enterprise: AI handles the heavy lifting, while humans make the decisions. Context is the next challenge.

This is the second post in a three-part series on key insights and findings from the Digital Production Partnership (DPP) Leaders Briefing 2025 in London. The DPP is one of the media industry’s most important trade associations, bringing together senior executives from major media organizations. More than 1,000 people attended the conference, held on November 18 and 19.

The transformation from AI demos to production systems is underway across media and entertainment. But the more interesting story isn’t that AI has arrived, it’s where it’s actually working, where it’s struggling, and what infrastructure changes are required to unlock the next wave of value.

Where AI Is Delivering Real Value in the Media Enterprise Space

Use Case 1: Ingest and Metadata. The Highest-Leverage Point for Automation

High-quality and multimodal metadata at point of ingestion lies at the heart of building intelligent and robust AI systems further downstream in the media supply chain.

The standout finding from recent industry research, including the DPP’s CEO and CTO surveys, is that metadata generation and enrichment, particularly speech-to-text (automated speech recognition), has crossed the adoption chasm. More than 80% of organizations are either encouraging or actively implementing these capabilities.

The logic is sound. The oldest rule in data engineering applies: garbage in, garbage out. If your metadata is incomplete, inconsistent, or wrong, everything downstream suffers. Search breaks. Rights management fails. Compliance becomes manual. Smart organizations have realized that the front door of the supply chain is the highest-leverage point for automation.

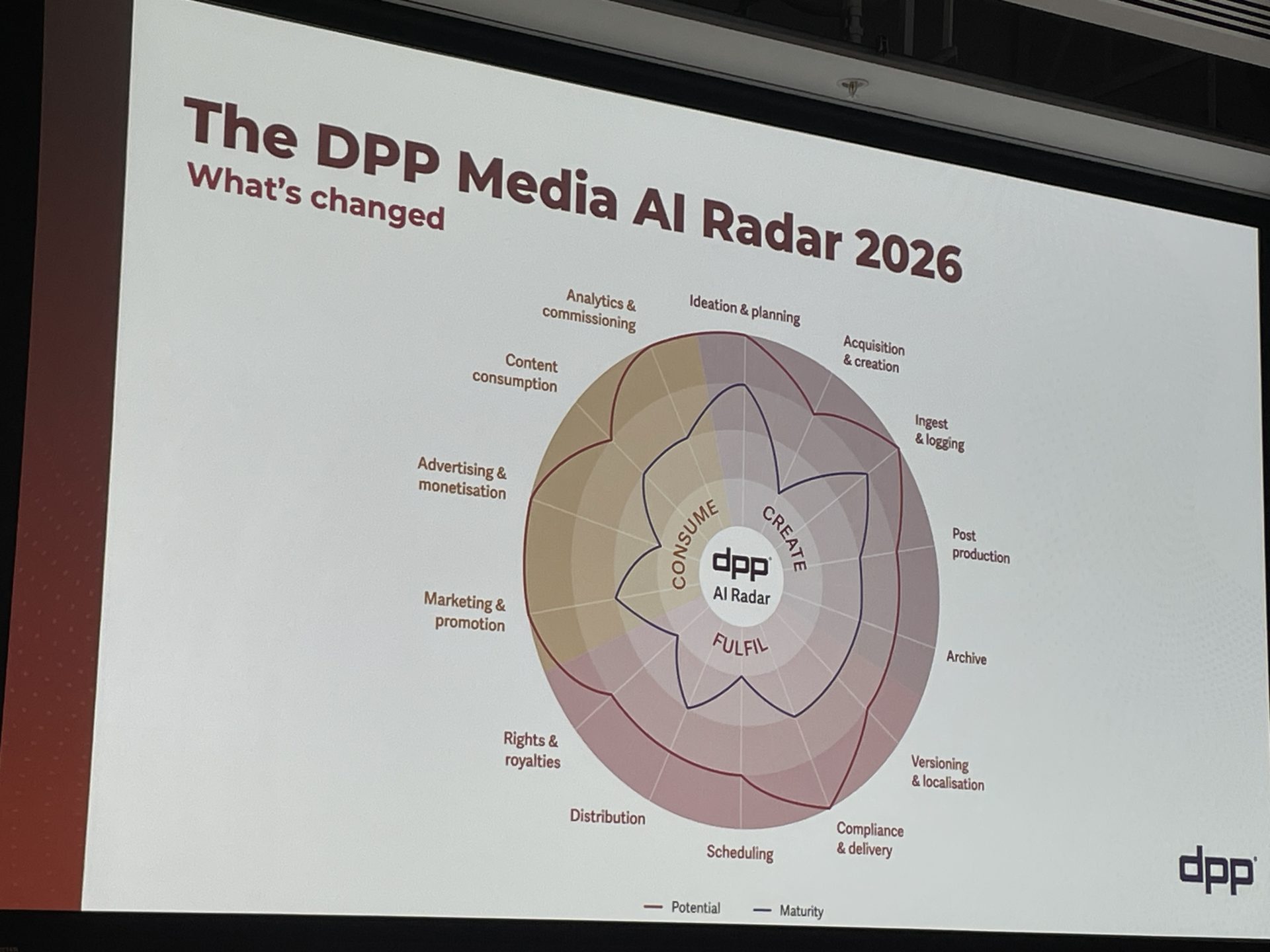

The DPP’s Media AI Radar 2026, based on industry interviews, forecasts higher adoption of AI in ideation, planning, analytics, commissioning, compliance, video marketing, advertising, and ingest/logging.

The more sophisticated implementations involve what’s being called “agentic orchestration”: AI systems that don’t just transcribe content but actively monitor ingestion workflows for anomalies.

For example, suppose a file arrives with metadata that doesn’t match the expected format or lacks the required depth and accuracy. Maybe the localization markers are wrong, or the language code contradicts the filename. Traditional workflows would let that error flow downstream until a human spotted it.

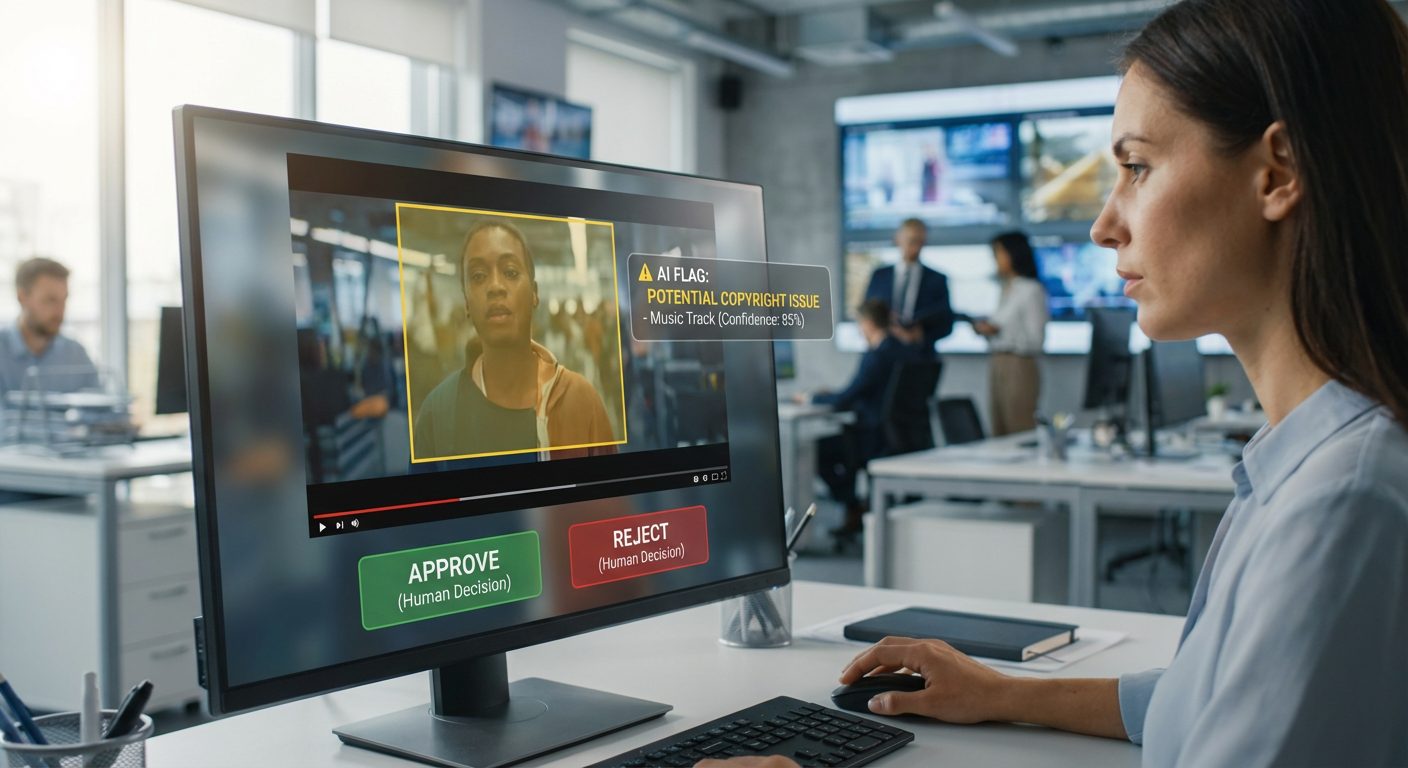

With agentic orchestration applied to quality control (QC), the system flags the mismatch immediately, provides context, and prompts investigation. A person remains in charge, operating with better information and recommendations earlier in the process.

However, as we will see later in this post, agentic AI needs not only highly accurate metadata but also fluent, robust, and secure access to content and data silos. This remains a challenge for large organizations.
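To make the QC example concrete, here is a minimal Python sketch of a rule-based pre-check that compares a file’s declared language code with a language tag in its filename and flags mismatches for a person to review. The filename convention (`<title>_<lang>.<ext>`), the required fields, and the `QcFlag` type are hypothetical; an agentic system would layer model-based checks on top, but the flag-and-route shape is the same.

```python
import re
from dataclasses import dataclass

# Hypothetical naming convention: <title>_<lang>.<ext>, e.g. "episode01_fr.mxf"
LANG_IN_FILENAME = re.compile(r"_([a-z]{2})\.[A-Za-z0-9]+$")
# Fields assumed to be mandatory at ingest (illustrative only).
REQUIRED_FIELDS = ("language", "title", "duration_s")


@dataclass
class QcFlag:
    """A finding routed to a human reviewer; nothing is fixed automatically."""
    filename: str
    issue: str
    context: str


def check_ingest(filename: str, metadata: dict) -> list[QcFlag]:
    """Flag missing fields and a language code that contradicts the filename."""
    flags = [
        QcFlag(filename, f"missing field '{field}'", "required at ingest")
        for field in REQUIRED_FIELDS
        if field not in metadata
    ]
    match = LANG_IN_FILENAME.search(filename)
    declared = metadata.get("language")
    if match and declared:
        # Compare the primary language subtag only ("en" from "en-GB").
        primary = declared.split("-")[0].lower()
        if primary != match.group(1):
            flags.append(QcFlag(
                filename,
                "language code mismatch",
                f"filename says '{match.group(1)}', metadata says '{declared}'",
            ))
    return flags


if __name__ == "__main__":
    for flag in check_ingest("episode01_fr.mxf", {"language": "en", "title": "Episode 1"}):
        print(f"[REVIEW] {flag.filename}: {flag.issue} ({flag.context})")
```

Run on `episode01_fr.mxf` with `language: en`, the check reports two items for review: the missing duration and the language mismatch. The system surfaces the problem with context; a person decides what to do about it.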

Use Case 2: Localization. Subtitles, Captions, and Dubbing

AI is heavily disrupting the localization industry. Yet, the media industry continues to maintain a high bar for quality dubbing and audio descriptions. Humans and AI together produce the best output.

Subtitling, captions, and synthetic dubbing are seeing strong adoption (especially the first two), but with an important caveat: only 14% of teams find auto-generated captions “fully usable” without human review, and 75% cite timing and sync issues as their primary complaint.

Bad subtitles aren’t just annoying, they’re trust-destroying. The pattern emerging is AI-assisted drafting plus mandatory human review. Speech-to-text models generate initial captions. LLMs detect sync problems and propose corrections. Linguistic experts review the final files. The AI handles grunt work; humans ensure quality.
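The timing problems described above can be illustrated with plain rules. This Python sketch flags subtitle cues that overlap the next cue, stay on screen too briefly, or exceed a reading-speed ceiling, and leaves the correction to a linguist. The thresholds (0.8 s minimum duration, 17 characters per second) and the `Cue` type are illustrative assumptions, not any broadcaster’s style guide.

```python
from dataclasses import dataclass

MIN_DURATION_S = 0.8   # assumed minimum on-screen time
MAX_CPS = 17.0         # assumed reading-speed ceiling (characters per second)


@dataclass
class Cue:
    start_s: float
    end_s: float
    text: str


def timing_issues(cues: list[Cue]) -> list[tuple[int, str]]:
    """Return (cue_index, reason) pairs for a human reviewer; nothing is auto-corrected."""
    issues = []
    for i, cue in enumerate(cues):
        duration = cue.end_s - cue.start_s
        if duration <= 0:
            issues.append((i, "end time is not after start time"))
            continue
        if duration < MIN_DURATION_S:
            issues.append((i, f"too short ({duration:.2f}s)"))
        cps = len(cue.text.replace("\n", "")) / duration
        if cps > MAX_CPS:
            issues.append((i, f"reading speed {cps:.1f} cps"))
        # An overlap with the following cue often points to sync drift.
        if i + 1 < len(cues) and cues[i + 1].start_s < cue.end_s:
            issues.append((i, "overlaps next cue"))
    return issues
```

A model could propose new timings for each flagged cue, but in the AI-drafts, human-reviews pattern the list above is the input to the review step, not an automatic fix.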

Use Case 3: Marketing and Promotion – Dramatic Time Savings

Beyond the hype, automated and semi-automated video repurposing is proving to be a growing revenue stream for companies with long-form content, such as sports organizations, and a key way to monetize deep, high-quality catalogues.

This is where AI delivers some of its most compelling efficiency gains, and it goes well beyond flashy generative AI demos such as Nano Banana. A single piece of premium long-form content (sports matches, TV episodes, etc.) might need dozens of promotional assets tailored to different platforms, audiences, and campaigns.

AI tools can now analyze content, identify emotional beats, extract compelling sequences, and generate draft cuts in minutes. What used to take days now happens in hours or minutes. This is one of the use cases driving the highest demand for Imaginario AI, and it cuts across media, entertainment, sports, news, and even SMB content.
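As an illustration of the “extract compelling sequences” step, the following Python sketch greedily picks the highest-scoring, non-overlapping segments that fit a target duration, then orders them chronologically as a draft cut. The per-segment scores are assumed to come from an upstream model (for example, detected emotional beats); the `Segment` type and the greedy strategy are illustrative and are not a description of Imaginario AI’s engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    start_s: float
    end_s: float
    score: float  # assumed to come from an upstream model (0.0 to 1.0)

    @property
    def duration(self) -> float:
        return self.end_s - self.start_s


def overlaps(a: Segment, b: Segment) -> bool:
    return a.start_s < b.end_s and b.start_s < a.end_s


def draft_cut(segments: list[Segment], target_s: float) -> list[Segment]:
    """Greedy pick: best scores first, skip overlaps, stay within the target duration."""
    chosen: list[Segment] = []
    total = 0.0
    for seg in sorted(segments, key=lambda s: s.score, reverse=True):
        if total + seg.duration > target_s:
            continue
        if any(overlaps(seg, c) for c in chosen):
            continue
        chosen.append(seg)
        total += seg.duration
    # Return the draft in timeline order so an editor can review and trim it.
    return sorted(chosen, key=lambda s: s.start_s)
```

Greedy selection is simple and fast but not optimal; an editor still decides whether the draft tells the right story.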

In the case of Imaginario AI, we have seen our enterprise clients save between 50% and 75% of the time spent searching deep catalogues, navigating dailies, and generating social media compilations or single cuts. This is quantifiable ROI.

Use Case 4: Contextual Ad Discovery and Insertions. The Next Frontier in Programmatic Advertising

AI contextual ad discovery understands the nuances of content to place ads in the right environment. This enables highly personalized creative that aligns with a viewer’s immediate mindset and mood, boosting engagement without relying on personal data.

AI tools can analyze narrative structure, detect scene transitions, and suggest non-intrusive ad slots based on emotional pacing. They can also explain their reasoning, which is critical for creative buy-in. The tools that work don’t make autonomous placement decisions. They suggest options, show reasoning, and make overrides easy.
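A minimal Python sketch of the “suggest, explain, allow override” pattern: candidate ad slots are placed only at scene transitions, ranked by how calm the surrounding scenes are, and each suggestion carries a human-readable reason. The per-scene `intensity` score, the 0.4 threshold, and the `Scene` and `SlotSuggestion` types are assumptions for illustration; a real system would derive pacing from audio, visual, and narrative signals.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    start_s: float
    end_s: float
    intensity: float  # assumed 0.0 (calm) to 1.0 (climactic), from an upstream model


@dataclass
class SlotSuggestion:
    time_s: float
    reason: str


CALM_THRESHOLD = 0.4  # assumed: only suggest breaks between scenes below this intensity


def suggest_slots(scenes: list[Scene], max_slots: int = 2) -> list[SlotSuggestion]:
    """Suggest breaks at low-intensity scene boundaries; a human makes the final call."""
    candidates = []
    for before, after in zip(scenes, scenes[1:]):
        peak = max(before.intensity, after.intensity)
        if peak < CALM_THRESHOLD:
            reason = (f"scene transition at {before.end_s:.0f}s; "
                      f"adjacent intensity {before.intensity:.1f}/{after.intensity:.1f}")
            candidates.append((peak, SlotSuggestion(before.end_s, reason)))
    # Keep the calmest transitions, then present them in timeline order.
    candidates.sort(key=lambda c: c[0])
    picked = [s for _, s in candidates[:max_slots]]
    return sorted(picked, key=lambda s: s.time_s)
```

The function only returns suggestions with their reasoning; placing, moving, or rejecting a break remains an editorial decision.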

The Maturity Gap: Where We Actually Are

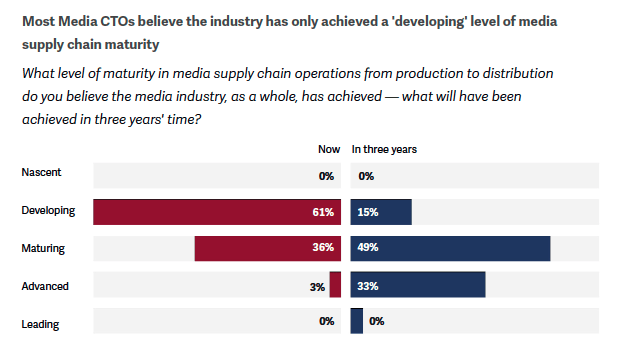

For all this progress, humility and baby steps are still in order. When surveyed by the DPP about media supply chain maturity, 61% of respondents said “developing” and 36% said “maturing.” Only 3% called it “advanced.” Nobody, not a single respondent, said “leading”.

The vast majority of media companies are still developing their cloud, interoperability, and AI capabilities, and most expect to reach a more mature stage within three years. Source: DPP CTO Survey 2025.

Projected three years ahead, roughly half expect to be maturing, a third hope for advanced, and just 3% think the industry will be truly leading.

We’re in the early innings. The teams deploying AI today are pioneers, not late adopters. Most organizations are still figuring out use cases, governance, training, and change management. The technology is ahead of organizational readiness.

Co-Pilot, Not Autopilot: The Design Pattern That Works

The highest gains are seen when humans and AI do what they do best: AI handles data processing, automation of repetitive tasks, and generating drafts. Humans use critical judgement, context, creativity, and empathy.

Across trust, integration, governance, security, and perceived technology maturity, concern levels spike whenever AI autonomy increases. People want smart assistants. They do not want self-driving compliance, QC, or editorial decisions.

The pattern that’s working: constrained agency. AI with enough freedom to do meaningful work, but within boundaries that remain legible and controllable by humans. The level of acceptable autonomy varies by use case. Legal compliance has a higher accuracy bar than generating marketing highlights, but the principle holds.

This isn’t because the technology can’t handle more autonomy. It’s because organizations aren’t ready to trust it, and more importantly, because accountability matters. When something goes wrong, someone has to answer for it. That someone needs to be a human.
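One way to make constrained agency explicit is a per-use-case policy that decides whether an AI output is applied automatically, queued for human approval, or dropped so a person handles the task directly. The Python sketch below assumes the model reports a confidence score; the use-case names and thresholds are hypothetical and only illustrate that legal compliance gets a stricter bar than marketing highlights.

```python
from enum import Enum


class Action(Enum):
    AUTO_APPLY = "auto_apply"
    HUMAN_REVIEW = "human_review"
    HUMAN_ONLY = "human_only"  # too uncertain to propose; a person does the task


# Hypothetical policy: (auto-apply threshold, minimum confidence to propose a draft).
# A threshold above 1.0 means the use case is never applied without a human.
POLICY = {
    "marketing_highlights": (0.85, 0.50),
    "subtitles": (1.01, 0.60),
    "legal_compliance": (1.01, 0.80),
}


def route(use_case: str, confidence: float) -> Action:
    """Decide how much autonomy an AI output gets; unknown use cases default to review."""
    if use_case not in POLICY:
        return Action.HUMAN_REVIEW
    auto_threshold, propose_threshold = POLICY[use_case]
    if confidence >= auto_threshold:
        return Action.AUTO_APPLY
    if confidence >= propose_threshold:
        return Action.HUMAN_REVIEW
    return Action.HUMAN_ONLY
```

Keeping the policy in one table makes the boundaries legible: anyone can see which workflows may act on their own and which always end with a human decision.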

As one technology vendor put it perfectly during the DPP Leaders Briefing last November in London: “AI handles the heavy lifting; humans handle the decisions.”

The Enterprise Challenge: A Fragmented Context

AI implementations often stall because essential enterprise context is locked in fragmented systems and unstructured media formats like video and audio. Making diverse data accessible is critical infrastructure work required for scalable AI success.

For AI agents to be effective in the enterprise, they need enterprise context. That context sits in contracts, financial documents, research, marketing assets, meeting notes, conversations, and every other piece of information across the organization. By volume, most of this data is unstructured and is spread across on-premises and cloud systems. There is no one-size-fits-all solution, as each organization is at a different stage of digitalization and cloud development.

Your customer information might live in HubSpot. Projects are in Monday. Engineering issues sit in Jira. Financials are in Xero or SAP. Your product catalog lives in a separate database and in MAM systems. Branding and marketing assets are scattered across DAMs and creative toolkits such as Adobe or Avid.

Enterprise context is highly fragmented and requires bespoke integrations, taxonomies, and adapted schemas that keep data consistent and make it easier for different systems to understand and exchange it. Without this, even the smartest AI won’t know your organization or your workflows, and it will produce inaccurate information or hallucinate.
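The “adapted schemas” point can be sketched as per-system field mappings into one canonical record. In this Python example the source names, field names, and the canonical `Asset` schema are all hypothetical; real MAM or DAM payloads differ, and production mappings also reconcile vocabularies through a shared taxonomy, which the `GENRE_TAXONOMY` dictionary stands in for here.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    """Hypothetical canonical schema shared by every connected system."""
    asset_id: str
    title: str
    genre: str
    source: str


# Hypothetical per-system mappings: canonical field -> source field.
FIELD_MAPS = {
    "mam": {"asset_id": "clipId", "title": "clipTitle", "genre": "category"},
    "dam": {"asset_id": "id", "title": "name", "genre": "tags_genre"},
}

# Hypothetical shared taxonomy: local labels -> canonical genre terms.
GENRE_TAXONOMY = {"footy": "sport", "football": "sport", "docu": "documentary"}


def normalize(source: str, record: dict) -> Asset:
    """Map one system's record onto the canonical schema."""
    mapping = FIELD_MAPS[source]
    raw_genre = str(record.get(mapping["genre"], "unknown")).strip().lower()
    return Asset(
        # Prefix IDs with their source so records from different systems never collide.
        asset_id=f"{source}:{record[mapping['asset_id']]}",
        title=record[mapping["title"]],
        genre=GENRE_TAXONOMY.get(raw_genre, raw_genre),
        source=source,
    )
```

Once a “Footy” clip in the MAM and a “Football” poster in the DAM both resolve to `sport`, an agent can reason about them together instead of treating them as unrelated.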

This fragmentation is why so many AI implementations stall after promising pilots. The AI works brilliantly on a single data source but struggles when it needs to compose context from multiple systems to answer real business questions.

And there’s another layer of complexity: a massive portion of enterprise context is locked in complex media formats like video, audio, and images that agents can’t natively process. Making these formats truly legible to AI is essential infrastructure work that most organizations haven’t yet addressed. Until this unstructured content becomes structured and searchable, AI agents will operate with significant blind spots.
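A small illustration of what “structured and searchable” means for audio and video: once speech-to-text produces timecoded segments, even a simple inverted index lets an agent answer “where is X mentioned?” with a timecode rather than a whole file. The segment format and the `TranscriptIndex` class are assumptions for this Python sketch; production systems typically add visual labels and vector embeddings alongside words.

```python
import re
from collections import defaultdict

TOKEN = re.compile(r"[a-z0-9']+")


class TranscriptIndex:
    """Inverted index from words to (asset_id, start_s) positions in timecoded transcripts."""

    def __init__(self) -> None:
        self._postings: dict[str, set[tuple[str, float]]] = defaultdict(set)

    def add(self, asset_id: str, segments: list[tuple[float, str]]) -> None:
        # Each segment is (start time in seconds, transcribed text).
        for start_s, text in segments:
            for word in TOKEN.findall(text.lower()):
                self._postings[word].add((asset_id, start_s))

    def search(self, query: str) -> list[tuple[str, float]]:
        """Return positions whose segment contains every query word, sorted by asset and time."""
        words = TOKEN.findall(query.lower())
        if not words:
            return []
        hits = set.intersection(*(self._postings.get(w, set()) for w in words))
        return sorted(hits)
```

For example, indexing a match commentary and searching for “stunning goal” returns the asset ID and the second at which the phrase’s segment starts, which is exactly the kind of pointer an agent or editor can act on.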

The only way AI agents will be successful at scale is if they have access to the right information, in the right structure, at the right time, in a secure and well-governed way. AI will expand the use and value of this information by orders of magnitude over time, but only if the infrastructure exists to make it accessible.

MCP and the Agentic Architecture Shift

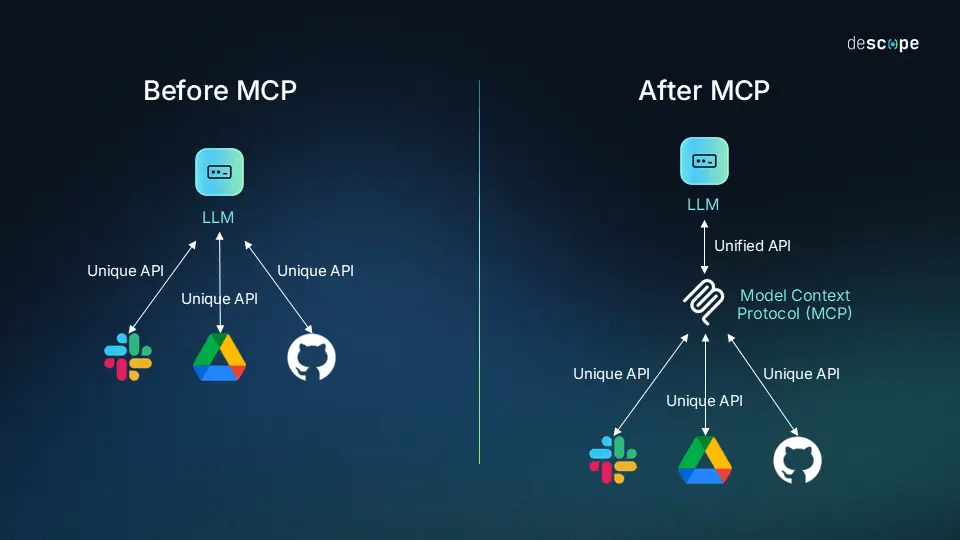

A single contextual layer to rule all APIs and bespoke integrations in the Era of AI: Model Context Protocol (MCP). Source: Descope.

This fragmentation problem is exactly why the Model Context Protocol (MCP) ecosystem has become strategically important, even where APIs already exist.

MCP, originally developed by Anthropic and now donated to the Linux Foundation’s new Agentic AI Foundation, is a universal, open standard for connecting AI applications (MCP clients) to external data sources, tools, and third-party systems. It’s not just about connecting agents to a system; it’s about composing context from all the systems that collectively hold your enterprise’s cognitive reality.
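Under the hood, MCP messages are JSON-RPC 2.0. The Python sketch below hand-rolls responses to the two methods an agent uses to discover and invoke a server’s capabilities, `tools/list` and `tools/call`, for a hypothetical `search_footage` tool. It deliberately omits the initialization handshake, transports, and much of the error handling the specification requires, so treat it as an illustration of message shapes rather than a usable server; the official MCP SDKs handle those details.

```python
import json

# Hypothetical tool exposed by a media-archive MCP server.
TOOLS = [{
    "name": "search_footage",
    "description": "Find timecoded clips matching a text query.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}]


def search_footage(query: str) -> str:
    # Stub backend; a real server would query a MAM or search index here.
    return f"0 clips found for '{query}' (stub)"


def _reply(req_id, *, result=None, error=None) -> str:
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Answer a single JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    if method == "tools/list":
        return _reply(req_id, result={"tools": TOOLS})
    if method == "tools/call":
        params = req.get("params", {})
        if params.get("name") != "search_footage":
            return _reply(req_id, error={"code": -32602,
                                         "message": f"Unknown tool: {params.get('name')}"})
        text = search_footage(**params.get("arguments", {}))
        return _reply(req_id, result={
            "content": [{"type": "text", "text": text}],
            "isError": False,
        })
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})
```

Because every server describes its tools the same way (a name, a description, and a JSON Schema for inputs), a client can discover and call tools from many systems without a bespoke integration for each one.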

The numbers tell the story of rapid adoption: over 10,000 active public MCP servers exist today, covering everything from developer tools to Fortune 500 deployments. MCP has been adopted by ChatGPT, Cursor, Gemini, Microsoft Copilot, Visual Studio Code, and other major AI products. Enterprise-grade infrastructure now exists with deployment support from AWS, Cloudflare, Google Cloud, and Microsoft Azure. This will likely become the underlying glue of AI.

What we’re seeing emerge is an evolution from atomized MCPs (reflecting individual APIs) to aggregated, orchestrated context layers that can assemble meaningful context on demand. The winners in the AI-enabled enterprise won’t just be the platforms that secure their own data well. They’ll be the ones that embrace composability and make their context genuinely AI-visible.
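The shift from atomized connectors to an aggregated context layer can be sketched as a fan-out: one query goes to several sources, and the results come back merged, ranked, and tagged with where they came from so a person can verify them. The connector functions, fixed relevance scores, and the `ContextItem` type in this Python example are hypothetical stand-ins for individual MCP servers.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass
class ContextItem:
    source: str       # provenance, so a human can check where the context came from
    text: str
    relevance: float  # assumed to be comparable across sources


Connector = Callable[[str], list[ContextItem]]


def crm(query: str) -> list[ContextItem]:        # hypothetical CRM connector
    return [ContextItem("crm", f"Deal notes mentioning {query}", 0.6)]


def rights_db(query: str) -> list[ContextItem]:  # hypothetical rights-database connector
    return [ContextItem("rights", f"Rights record for {query}", 0.9)]


def compose_context(query: str, connectors: list[Connector], top_k: int = 3) -> list[ContextItem]:
    """Query all connectors in parallel, then merge and rank the results."""
    with ThreadPoolExecutor() as pool:
        # A failing connector raises here; production code would isolate failures.
        batches = list(pool.map(lambda connector: connector(query), connectors))
    merged = [item for batch in batches for item in batch]
    return sorted(merged, key=lambda item: item.relevance, reverse=True)[:top_k]
```

The hard parts in practice are the ones this sketch assumes away: making relevance scores comparable across systems and enforcing each source’s access permissions before anything reaches the agent.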

The agentic future requires infrastructure that makes context available, structured, and secure across the entire SaaS ecosystem. That’s the architecture shift everyone is building toward.

What This Means for the Next Wave

If you’re building enterprise AI tools for media or implementing them in your organization:

- Prioritize the ‘front door’. The highest-leverage automation opportunities are at ingest and metadata generation at scale. Get that right, and value compounds downstream. Note: Imaginario AI provides labeled and vector-based contextual understanding and can help your organization in this part of the supply chain.

- Design for transparency. If your AI makes recommendations, show your reasoning. Black boxes don’t scale where trust must be earned through explainability.

- Assume human review first. The workflows that work (today) are ones where AI drafts and humans approve. Plan for that from the start, optimize for automation later.

- Solve the fragmentation problem. Invest in infrastructure that makes enterprise context composable, secure, and AI-visible across your SaaS ecosystem. MCP adoption is accelerating for good reason. APIs are rapidly adapting to support MCP, and Imaginario AI is no exception.

- Build for constrained agency. Give your AI enough freedom to do real work, but keep it within boundaries humans can understand and control.

The transformation is messy, uneven, and slower than the hype cycle suggests. But it’s happening. AI is moving from the margins to the mainstream, from idiotic hype to efficient supply chains.

We’re not at full automation for most workflows, as context and personalization at scale have yet to be fully solved. Instead, we’ve reached something more mundane and more valuable: AI as co-pilot, lifting cognitive weight and letting humans focus on judgment and creativity.

The infrastructure work, making enterprise data composable, structured, and AI-visible, is less glamorous than demos of agents completing complex tasks autonomously. But it’s the foundation everything else depends on. The organizations that invest in this plumbing now will be the ones positioned to capture value as agentic capabilities mature.

That might not make for breathless keynote presentations. But it’s the future that’s actually getting built.

About Imaginario.ai

Backed by Techstars, Comcast, and NVIDIA Inception, Imaginario AI helps media companies turn massive volumes of footage into searchable, discoverable, and editable content. Its Cetus™ AI engine combines speech, vision, and multimodal semantic understanding to deliver indexing, simplified smart search, automated highlight generation, and intelligent editing tools.